Research grants and output quality assessment: Complementary or redundant?

This article originally appeared as an invited post on the LSE Impact Blog.

Grading the quality of academic research is hard. That is why last year’s Research Excellence Framework (REF) assessment was complex and lengthy. Preparation for the REF started long before 2014, on all sides, and completing it cost the sector an estimated £250m. Universities had every incentive to invest heavily in their submissions, because success meant a larger allocation of “quality-related” (QR) funds, whose core research component amounts to over a billion pounds per year in England alone.

The research “outputs” that formed the basis of the REF assessment were in part the product of projects funded by the UK’s research councils and learned societies, which themselves receive their funding from the government. These funds, amounting to some £1.7bn across the UK in 2013/14, are awarded to individual researchers and collaborative groups on the basis of research proposals that take substantial academic and administrative effort to write. More than 70 percent of those applications fail.

One might hope that these “before” and “after” assessments of research quality would be broadly concordant. And in 2013, a report commissioned by the government department responsible for the universities found that, indeed, there was a strong relationship between grant success and performance in the REF’s predecessor assessment. Moreover, the authors concluded that if QR funding were allocated in direct accordance with grant income levels, there would be little overall effect on the amount each higher education institution receives, although such an arrangement would further concentrate funds in the most successful universities.
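The counterfactual discussed in that report, allocating QR money in direct accordance with grant income, amounts to simple proportional arithmetic. The sketch below is hypothetical and is not the report's model: the function names, institution names and figures are all made up for illustration, and "share of the top k recipients" is just one crude way to express concentration.

```python
def allocate_in_proportion(pot, grant_income):
    """Split a fixed pot across institutions in proportion to their grant income."""
    total = sum(grant_income.values())
    return {name: pot * income / total for name, income in grant_income.items()}


def share_of_top(allocation, k):
    """Fraction of the total received by the k largest recipients (a crude concentration measure)."""
    amounts = sorted(allocation.values(), reverse=True)
    return sum(amounts[:k]) / sum(amounts)


# Illustrative figures only: hypothetical institutions, arbitrary units.
grants = {"Inst A": 300.0, "Inst B": 120.0, "Inst C": 60.0, "Inst D": 20.0}
current_qr = {"Inst A": 40.0, "Inst B": 25.0, "Inst C": 15.0, "Inst D": 5.0}

# Keep the overall pot fixed and redistribute it by grant income.
pot = sum(current_qr.values())
proposed = allocate_in_proportion(pot, grants)
for name in grants:
    print(f"{name}: current {current_qr[name]:.1f}, proportional {proposed[name]:.1f}")
print("top-1 share now:", round(share_of_top(current_qr, 1), 3))
print("top-1 share if proportional:", round(share_of_top(proposed, 1), 3))
```

Holding the pot fixed isolates the redistribution effect; whether concentration rises in practice depends entirely on the real data, not on these toy numbers.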

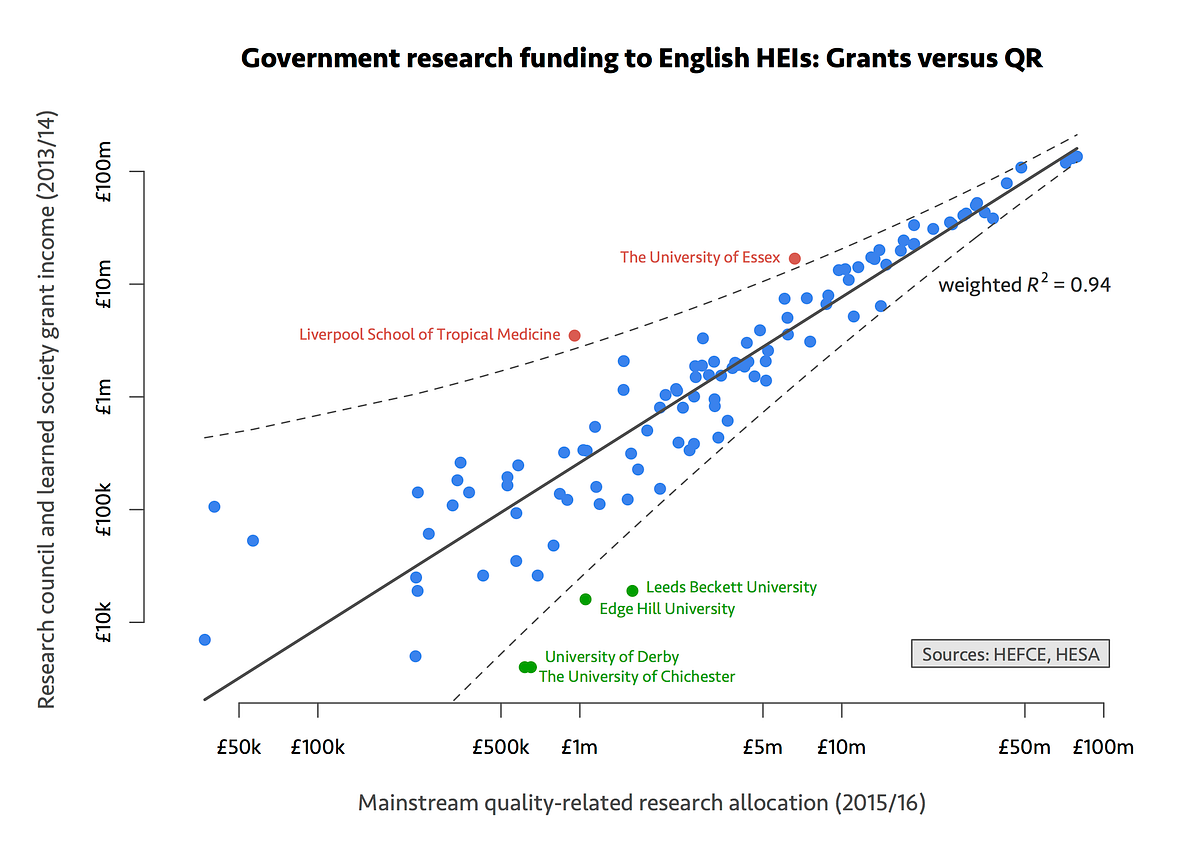

Despite that report’s wide-ranging analysis of the current dual funding structure, it did not directly show the relationship between grants and QR, nor did it expand on the details of the model the authors fitted. So, armed with HEFCE’s 2015/16 QR allocation data, which are publicly available and based on the results of the REF, as well as data on grant income kindly provided by HESA, I set out to explore the relationship myself.

A linear trend is immediately obvious. (It should be pointed out, however, that the analysis only covers England, and includes just one year’s data on each axis.) Since allocations vary by several orders of magnitude, I plotted the data and fitted the model on a log–log scale. On this scale there is greater spread around the core relationship where the numbers are small, so the linear model was fitted using a weighted regression to give the lower end of the scale less influence. The dashed lines represent the 95 percent prediction interval: the range within which about 95 percent of additional data points would be expected to fall. Individual institutions that fall outside that range are coloured differently and labelled.
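For readers who want to try something similar, here is a minimal sketch of a weighted log–log fit with a prediction interval, using only the Python standard library. It is not the code behind the figure. The post does not say which weights were used, so the weights are a parameter and the example weights each institution by its log grant income purely for illustration. It also uses a normal quantile in place of the Student t quantile, an approximation that is close for samples of a hundred or so institutions. The function names and the toy data are hypothetical.

```python
import math
from statistics import NormalDist


def weighted_loglog_fit(grants, qr, weights):
    """Weighted least-squares fit of log10(qr) = intercept + slope * log10(grants)."""
    x = [math.log10(g) for g in grants]
    y = [math.log10(q) for q in qr]
    w_sum = sum(weights)
    # Weighted means of the log-transformed variables.
    x_bar = sum(w * xi for w, xi in zip(weights, x)) / w_sum
    y_bar = sum(w * yi for w, yi in zip(weights, y)) / w_sum
    s_xx = sum(w * (xi - x_bar) ** 2 for w, xi in zip(weights, x))
    s_xy = sum(w * (xi - x_bar) * (yi - y_bar) for w, xi, yi in zip(weights, x, y))
    slope = s_xy / s_xx
    intercept = y_bar - slope * x_bar
    # Weighted residual variance, with n - 2 degrees of freedom for two parameters.
    resid_ss = sum(
        w * (yi - intercept - slope * xi) ** 2 for w, xi, yi in zip(weights, x, y)
    )
    sigma2 = resid_ss / (len(x) - 2)
    return {"intercept": intercept, "slope": slope, "sigma2": sigma2,
            "x_bar": x_bar, "s_xx": s_xx, "w_sum": w_sum}


def prediction_interval(fit, grant, weight, level=0.95):
    """Approximate prediction interval for log10(QR) at a given grant income.

    A new point with weight w is assumed to have variance sigma2 / w; the
    normal quantile stands in for the Student t quantile (an approximation).
    """
    x0 = math.log10(grant)
    centre = fit["intercept"] + fit["slope"] * x0
    var_fitted = fit["sigma2"] * (1 / fit["w_sum"] + (x0 - fit["x_bar"]) ** 2 / fit["s_xx"])
    var_new = fit["sigma2"] / weight
    z = NormalDist().inv_cdf(0.5 + level / 2)
    half_width = z * math.sqrt(var_fitted + var_new)
    return centre - half_width, centre + half_width


def flag_outliers(names, grants, qr, weights, level=0.95):
    """Return the fit and the institutions whose log QR lies outside the interval."""
    fit = weighted_loglog_fit(grants, qr, weights)
    flagged = []
    for name, g, q, w in zip(names, grants, qr, weights):
        low, high = prediction_interval(fit, g, w, level)
        if not low <= math.log10(q) <= high:
            flagged.append(name)
    return fit, flagged


# Toy data (not real HEFCE/HESA figures): a log-log line with small noise
# plus one deliberately displaced point.
log_grants = [4 + 0.25 * i for i in range(17)]
offsets = [0.05 if i % 2 == 0 else -0.05 for i in range(17)]
offsets[8] = 1.0
grants = [10 ** x for x in log_grants]
qr = [10 ** (1 + 0.9 * x + o) for x, o in zip(log_grants, offsets)]
names = [f"inst{i}" for i in range(17)]

# Illustrative weighting: larger institutions (higher log income) count for more.
fit, flagged = flag_outliers(names, grants, qr, weights=log_grants)
print(f"slope {fit['slope']:.3f}, intercept {fit['intercept']:.3f}, flagged {flagged}")
```

Because each point's observation variance is taken as `sigma2 / weight`, the interval widens where the weights are small, which mirrors the greater spread at the lower end of the scale described above.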

The relationship between these income measures is clearly strong, and even the apparent exceptions are readily accounted for. All four institutions coloured green received a much smaller proportion of their grant funding from research councils and learned societies than the average of 30 percent (the remainder coming from other government sources, the EU, charities and industry), while for Essex the proportion is much higher, at 74 percent. So had the funding mix of these institutions been more typical, they would all have sat closer to the fit line.

Others have proposed that the REF be scrapped in favour of an allocation in proportion to grant income, and this analysis supports the feasibility of such an arrangement in general terms. While potentially more controversial, the converse is also possible, whereby the quality of research outputs would displace project grants as the sole basis for allocating government funds. I have argued previously that the principal investigator–led competitive grant system is wasteful, distorting and off-putting for many talented post-docs, that it can disincentivise collaboration, and that it may help reinforce disparities in academic success between the sexes. But whichever way one looks at it (and I suspect that academics and administrators will view it differently), this expensive double-counting exercise is surely not the best we can do.

And yet, we must not lose sight of the need for transparency. Whatever system is used for doling out government funding to the universities, their use of taxpayers’ money must ultimately be justified. The challenge is to be as clear and as fair as possible, without creating incentive structures that end up harming the very process we set out to measure. Some truly fresh thinking is needed, and I don’t think anything should be off the table.

Assessing quality really is hard, and the REF was not without its critics. But at least it set out to focus on what is truly important: the quality of research that the UK’s universities are producing, and its influence on the wider world. We would do well to spend less time and money assessing that, and more on working out how to make it even better.